Capstone Project: Week 7, Roadblock and Resolution (Cloud Troubleshooting)

Hello and welcome to Week 7 of development for my Capstone Project: Continuous Integration/Continuous Deployment Pipelines. This week, as you may have guessed, featured my first major issue. However, it also features a resolution!

This post will go into detail about my troubleshooting efforts, but to spare the reader from having to dive deep into the post to scrounge up information on my progress, I will detail the outcomes of this week first.

Outcomes

Updates & Changes to my Project:

- New Application - I have sourced the skf-labs repository for use as the base application for my project.

- Container Registry - At least for the time being, I will be using Docker Hub instead of Azure Container Registry for my container registry.

- Pipeline Adapted for New Application - Old pipeline, for the most part, is successfully integrated into this new application in a new Azure DevOps project.

- Final Project GitHub Repo - New GitHub repo is hosted here

This week was a great week and I have gained a lot from it. Here is a list of the skills and knowledge I gained, just off the top of my head:

- Cloud Troubleshooting - Reading error logs from Azure App Service and Azure DevOps Pipelines. Testing Azure App Service instances against Azure Container Registry. Comparing working images with non-working ones. Adding configuration keys to Azure App Service instances.

- Re-exploration of Flask - These SKF Lab applications are running Flask, and I modified them to combine two of them into one single application. I also learned about default flask ports and how to change them.

- Application Vulnerabilities - I experimented with a few of the SKF Labs applications, ran the appropriate exploitation steps, and saw their effects, which helped cement my understanding of Application Security.

- Teamwork & Communication - Aristher is experienced with AWS, but not Azure, so I had to explain my issue while also helping him understand the intricacies of Azure. We were able to get to work relatively swiftly with him having full understanding of my project and the necessary parts of Azure.

- Docker - My Dockerfile, after many bouts of testing, was a complete mess, but it gave me a lot more experience in crafting one. I also now have a good grasp of the difference between the CMD and ENTRYPOINT instructions in the Dockerfile.

New Application - SKF Labs

I have finally found a wonderful and much simpler application to use for my pipeline. This application is actually a collection of small, intentionally vulnerable web applications built with Flask. These applications are based on the OWASP Security Knowledge Framework and help users learn how to integrate security into their applications.

As you can see in SKFLab 1, I am able to commit a Directory Traversal attack by modifying the file the code wants to access within Chrome dev tools and changing it to /etc/passwd. This is due to an intentional improper configuration of the code.

By removing the line above, I am no longer able to carry out the directory traversal! This alone should help me demonstrate fixing the code.

The write-up site that explains how to use these applications describes the issue as follows:

In the code example the "filename" parameter that is used to read content from files of the file system is under the users control. Instead of just reading the intended text files from the file system, a potential attacker could abuse this function to also read other sensitive information from the web server.

Code:

    @app.route("/home", methods=['POST'])
    def home():
        filename = request.form['filename']
        if filename == "":
            filename = "default.txt"
        f = open(filename,'r')
        read = f.read()
        return render_template("index.html",read = read)
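To make the fix concrete, here is a minimal sketch of one common way to constrain a user-supplied filename to a single directory. This is not the SKF Labs fix or the change I made in my own copy; the function name `resolve_safe_path` and the idea of a dedicated base directory are assumptions for illustration only.

```python
import os


def resolve_safe_path(base_dir: str, filename: str) -> str:
    """Return an absolute path for filename, or raise ValueError if it escapes base_dir.

    Hypothetical helper: neither the name nor the base-directory layout
    comes from the SKF Labs code.
    """
    if filename == "":
        filename = "default.txt"
    base = os.path.realpath(base_dir)
    # join() discards base when filename is absolute (e.g. /etc/passwd),
    # and realpath() collapses any ../ segments, so both tricks end up
    # visible in the resolved candidate path.
    candidate = os.path.realpath(os.path.join(base, filename))
    # commonpath equals base only when candidate is base or lives beneath it.
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"refusing to read outside {base}: {filename!r}")
    return candidate
```

A route like the one above could call this helper before `open()` and return an error page when it raises, instead of opening whatever path the form supplies.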

Combining Two of Them

While these are exactly what I need, I am worried that just one vulnerability might be too little, or it may not be detected at all by my scanners. As such, I decided to combine two of them into one, with the possibility to add more later.

The second application I chose was one that allows for a Cross-Site Scripting (XSS) attack, in which an attacker gets the page to run script code that it was never supposed to run, for various purposes and in various ways. If you want to check out XSS, OWASP is your friend!

SKFLab 2 demonstrates me running code in the text box that should generally not work. In the text box I ran the following JavaScript code: <script>alert(123)</script>
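The usual defense is to escape user input before placing it in the page. The sketch below uses only Python's standard library to show the effect on the payload above; the `render_comment` function is a hypothetical stand-in and is not how the SKF lab or my combined application renders its output.

```python
from html import escape


def render_comment(user_input: str) -> str:
    """Wrap user input in a paragraph after escaping HTML metacharacters.

    Hypothetical helper for illustration; a real Flask app would normally
    rely on template autoescaping rather than building HTML by hand.
    """
    return "<p>" + escape(user_input, quote=True) + "</p>"


print(render_comment("<script>alert(123)</script>"))
# prints: <p>&lt;script&gt;alert(123)&lt;/script&gt;</p>
```

Once escaped, the browser displays the payload as text instead of executing it.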

After some time of exploring Flask again, I was able to combine both of the vulnerabilities into one application. I also fixed up some things that made for a buggy application, like the application crashing if you send a GET request to a route that only allows for the POST method. I developed this on my Linux virtual machine which had pip and python already installed. I verified that both of them still work!

And finally, to make sure everything still ran, I built a docker image of the application and everything worked splendidly! I now have my application for my project.

Adapting New Application for Pipeline

So, now that I have my new application, the next thing I needed to do was set up the actual project and adapt the pipeline for it. Thankfully, my original pipeline works with any language so long as you want to build it into a container, so everything should have been fine.

After some issues with dealing with my pipeline not running due to the following error,

There was a resource authorization issue: "The pipeline is not valid. Job Deploy: Variable group Release could not be found. The variable group does not exist or has not been authorized for use. For authorization details, refer to https://aka.ms/yamlauthz

the pipeline went on its merry way. I won't go into detail about it, as it was just simple mistakes on my part. After fixing it, the pipeline worked, but there is an issue with hadolint, in which it detects an error and fails. Just for time's sake, I allowed the pipeline to continue on error, which let it move on anyway. I see now, though, that hadolint won't print its output to the console, but it will generate a text file. I can see I have the following errors for now:

    -:4 DL4000 error: MAINTAINER is deprecated
    -:7 DL3018 warning: Pin versions in apk add. Instead of `apk add <package>` use `apk add <package>=<version>`
    -:7 DL3019 info: Use the `--no-cache` switch to avoid the need to use `--update` and remove `/var/cache/apk/*` when done installing packages
    -:11 DL4000 error: MAINTAINER is deprecated
    -:14 DL3059 info: Multiple consecutive `RUN` instructions. Consider consolidation.
    -:15 DL3059 info: Multiple consecutive `RUN` instructions. Consider consolidation.
    -:31 DL4006 warning: Set the SHELL option -o pipefail before RUN with a pipe in it. If you are using /bin/sh in an alpine image or if your shell is symlinked to busybox then consider explicitly setting your SHELL to /bin/ash, or disable this check
    -:31 SC2038 warning: Use -print0/-0 or -exec + to allow for non-alphanumeric filenames.
    -:34 SC2038 warning: Use -print0/-0 or -exec + to allow for non-alphanumeric filenames.
    -:34 DL4006 warning: Set the SHELL option -o pipefail before RUN with a pipe in it. If you are using /bin/sh in an alpine image or if your shell is symlinked to busybox then consider explicitly setting your SHELL to /bin/ash, or disable this check
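Since the hadolint report lands in a text file rather than on the console, a small script can summarize it. The following is a rough sketch that assumes the report keeps the `file:line RULE severity: message` shape seen above; the function names are my own, and the color-code stripping is there because the saved file may still contain terminal color sequences.

```python
import re
from collections import Counter

# Matches terminal color sequences such as "\x1b[1m". The ESC byte is optional
# because some viewers drop it and leave only "[1m" behind.
ANSI = re.compile(r"\x1b?\[\d+(?:;\d+)*m")

# Assumed report shape: file:line RULE severity: message
FINDING = re.compile(
    r"^(?P<file>[^:]+):(?P<line>\d+) (?P<rule>[A-Z]+\d+) (?P<severity>\w+): (?P<message>.*)$"
)


def parse_findings(text):
    """Return one dict per report line that matches the assumed shape."""
    findings = []
    for raw in text.splitlines():
        match = FINDING.match(ANSI.sub("", raw).strip())
        if match:
            findings.append(match.groupdict())
    return findings


def count_by_severity(findings):
    """Count findings per severity label (error, warning, info, ...)."""
    return Counter(finding["severity"] for finding in findings)
```

With counts per severity in hand, a pipeline step could fail only when the `error` count is above zero, rather than continuing on every hadolint failure.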

However, the rest of the pipeline went on and was successful, yay! Well, it says it was. But when I went to check on my App Service Instance: Capstone Final, I was unfortunately greeted with this:

And so began my 1:00pm to 5:00am troubleshooting session, which then continued until about 7:00pm the next day.

Troubleshooting

The first thing I did to troubleshoot my application error was to look back at my pipeline logs to make sure nothing odd had happened, which reported nothing bad. I then went on to verify that the container image was actually in my container registry, which it was.

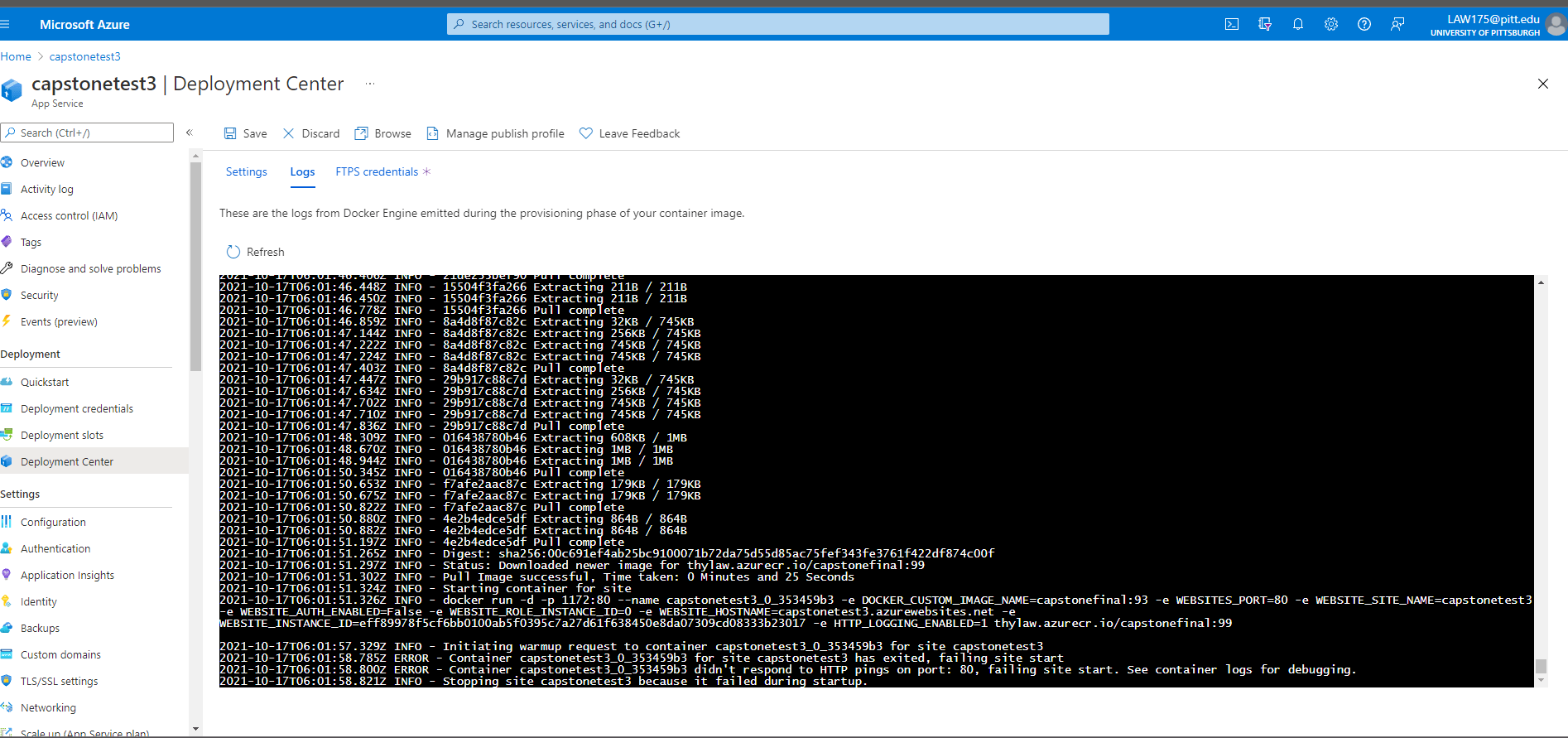

With that in mind, I decided to look at the logs. Azure App Service has two places to find logs: the Log Stream under Monitoring, which requires filesystem logging to be enabled, and the logs under Deployment Center, which monitor deployments. The Deployment Center logs are hidden behind the DevOps deployment view if you have that enabled, like so:

The Log Stream quickly pointed out my issue: the container wasn't able to find the app.py file and, as a result, was not responding to requests. HOWEVER, my brain completely disregarded the "can not open x" log and focused on the ports, as I already had an idea of what might have gone wrong there.

The port 80, which is the port for HTTP, is the default used for internet access to websites. Looking at the docker run command in the above error log, I could see that Azure was attempting to talk to the container on port 80, but for local development I had used port 5000. After trying to run the same command with the same port locally, the container obviously did not work.
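A quick way to confirm which port a locally running container actually answers on is to attempt a TCP connection to it. This is a small sketch under the assumption that the container's port is published on the local machine; the helper name `port_is_open` is hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # Ports taken from the discussion above: 80 (what Azure tried) and 5000 (local development).
    for candidate in (80, 5000):
        print(candidate, port_is_open("127.0.0.1", candidate))
```

A check like this only shows that something is listening; it does not confirm that the listener is the Flask application.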

My initial impression was that the port was inherited from the Alpine Linux image used in the Dockerfile, and I was worried I would not be able to edit it. However, after a bit of research, I found out that Flask's development server listens on port 5000 by default. I was able to change the code like so to make Flask listen on port 80:

    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=80)

And just like that, it worked in my local development environment. While I was in there, I also decided to add the line EXPOSE 80 5000 to my Dockerfile, which documents the ports the container is expected to listen on (the ports still have to be published or mapped by whatever runs the container). I gleefully pushed my changes up to my repository, which ran through the building and deployment process, and I was once again greeted with the same error. No change.

With that not working, I decided maybe I should leave it as 5000 and configure Azure App Service to connect to it on Port 5000 instead of 80. I was able to do this by changing the Application Settings with the following command:

--resource-group <group-name> --name <app-name> --settings WEBSITES_PORT=5000

Which resulted in the change here:

With that change added, Azure App Service will now start your container on the port you specify. I modified my files back to the defaults of port 5000 and pushed it up, but, as you might have guessed, it did not work.
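Switching between port 80 and port 5000 meant editing the source each time. One alternative is to read the port from an environment variable and fall back to Flask's default. The sketch below is not what my application does; the variable name `PORT` and the helper `pick_port` are assumptions for illustration.

```python
import os

DEFAULT_FLASK_PORT = 5000  # default port of Flask's development server


def pick_port(environ=os.environ, default=DEFAULT_FLASK_PORT):
    """Return the port from the (assumed) PORT variable, or default if it is unset."""
    raw = environ.get("PORT", "")
    if not raw.strip():
        return default
    port = int(raw)  # raises ValueError for non-numeric input
    if not 1 <= port <= 65535:
        raise ValueError(f"PORT out of range: {port}")
    return port


# In app.py this could replace the hard-coded value:
#     app.run(host='0.0.0.0', port=pick_port())
```

With this in place, the same image could listen on 5000 locally and on another port elsewhere just by setting the variable when the container starts.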

Another change I did at this time was I changed the XSS.py file to app.py which you see in the logs. I read that the Python server Azure uses, gunicorn, looks for this filename by default, so I figured it would be worth a shot.

With none of these steps working even though they should, as nothing was set up differently from my JuiceShop Application, I decided to pull down the image directly from my Azure Container Registry to make sure that it works.

After 30 minutes of being unable to pull it, because I was using thylaw/azurecr.io/capstonetest3:93 instead of thylaw.azurecr.io/capstonetest3:93 and had forgotten how to log in, I was able to pull the image and then build and run it successfully on my local computer:
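The slash-versus-dot typo matters because of how Docker reads an image reference: the part before the first slash is treated as a registry host only if it contains a dot or a colon, or is `localhost`; otherwise the whole string is taken as a repository on Docker Hub. Here is a simplified sketch of that rule. It ignores digests and does not add the `library/` prefix that Docker applies to single-name images, and the function name is my own.

```python
DEFAULT_REGISTRY = "docker.io"


def split_image_reference(reference: str):
    """Return (registry, repository, tag) for a reference without a digest."""
    first, slash, rest = reference.partition("/")
    # The first component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = DEFAULT_REGISTRY, reference
    repository, colon, tag = remainder.rpartition(":")
    # No colon, or a colon that belongs to an earlier path segment: no tag given.
    if not colon or "/" in tag:
        repository, tag = remainder, "latest"
    return registry, repository, tag


print(split_image_reference("thylaw.azurecr.io/capstonetest3:93"))
# prints: ('thylaw.azurecr.io', 'capstonetest3', '93')
print(split_image_reference("thylaw/azurecr.io/capstonetest3:93"))
# prints: ('docker.io', 'thylaw/azurecr.io/capstonetest3', '93')
```

In other words, the mistyped reference pointed at a repository on Docker Hub rather than at my Azure registry, which would explain why the pull kept failing.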

At this point I already felt pretty stumped. Everything is working how it's supposed to, and clearly my pipeline built the container correctly. Running out of things to try, I decided to just push to the Container Registry manually with a built image from my local files to confirm there were no issues with Azure DevOps.

I then created another App Instance which directly pulled from the container registry and was not automatically deployed, as shown below.

This became pretty useful as it allowed me to quickly test changes without having to wait for the pipeline to finish. It also allowed access to the Deployment Center logs, which show more about the pulling of the container than the Log Stream logs do.

Unfortunately, this did not work either, and the same errors were occurring. With no other idea of what to do at the time, I decided to contact my friend Aristher to see if maybe he could see something I was missing.

After walking through my project thus far and explaining some of the services of Azure I was using, we got to work. We came up with the idea to try and SSH into the App Service that was hosting the container to see what was going on in there, because it was clearly not finding app.py even though it should have been in the same place as the Dockerfile.

Unfortunately, the SSH feature does not work by default, and you need to set up an SSH daemon in the container as per the documentation by Microsoft here. While we believe this would have worked, as the image still built fine after converting the starting file to be a shell script instead of app.py, we were unable to SSH into the container because it needs to be running, which it wasn't, as it wouldn't start.

We further tried the Microsoft resources from here which offered other methods of SSHing into the App Service, but we couldn't as it wasn't running.

We then decided to see if all of our files were going to the right place locally. So, I brought down the image thylaw.azurecr.io/capstonefinal:100 from the Azure Container Registry, started a container from it, and shelled into that container with docker exec -it <container-name> bash.

We verified that the files were exactly where we wanted them to be, and the entrypoint was exactly where app.py and the Dockerfile were. There was no difference, and we were at a loss with no way to SSH in and the image being the exact same. I let Aristher leave when we couldn't come up with anything, and I decided to call it off for that night and try to create a simple Flask application that worked with Azure App Instance and compare my findings with my current project the next day... but I didn't end up calling it off at all.

I had earlier crafted a text document with all of the steps I had done thus far and sent it to my friend Tess, as well as an explanation of the problem. After some testing with my Dockerfile and parsing through logs from Azure DevOps, the log streams, and the docker history, she confirmed that my Dockerfile was correct, though sloppy, and should work.

I began to lose hope at this point, and tried some more desperate and improbable tests, like creating just an instance of my Docker container directly from my registry (not Azure App Service), and putting PORT 5000 in my application settings on top of the WEBSITES_PORT 5000 like before. However, Tess said the most important line of the weekend:

I'd be curious to see how the Azure engine would handle docker.io/blabla1337/owasp-skf-lab:sqli

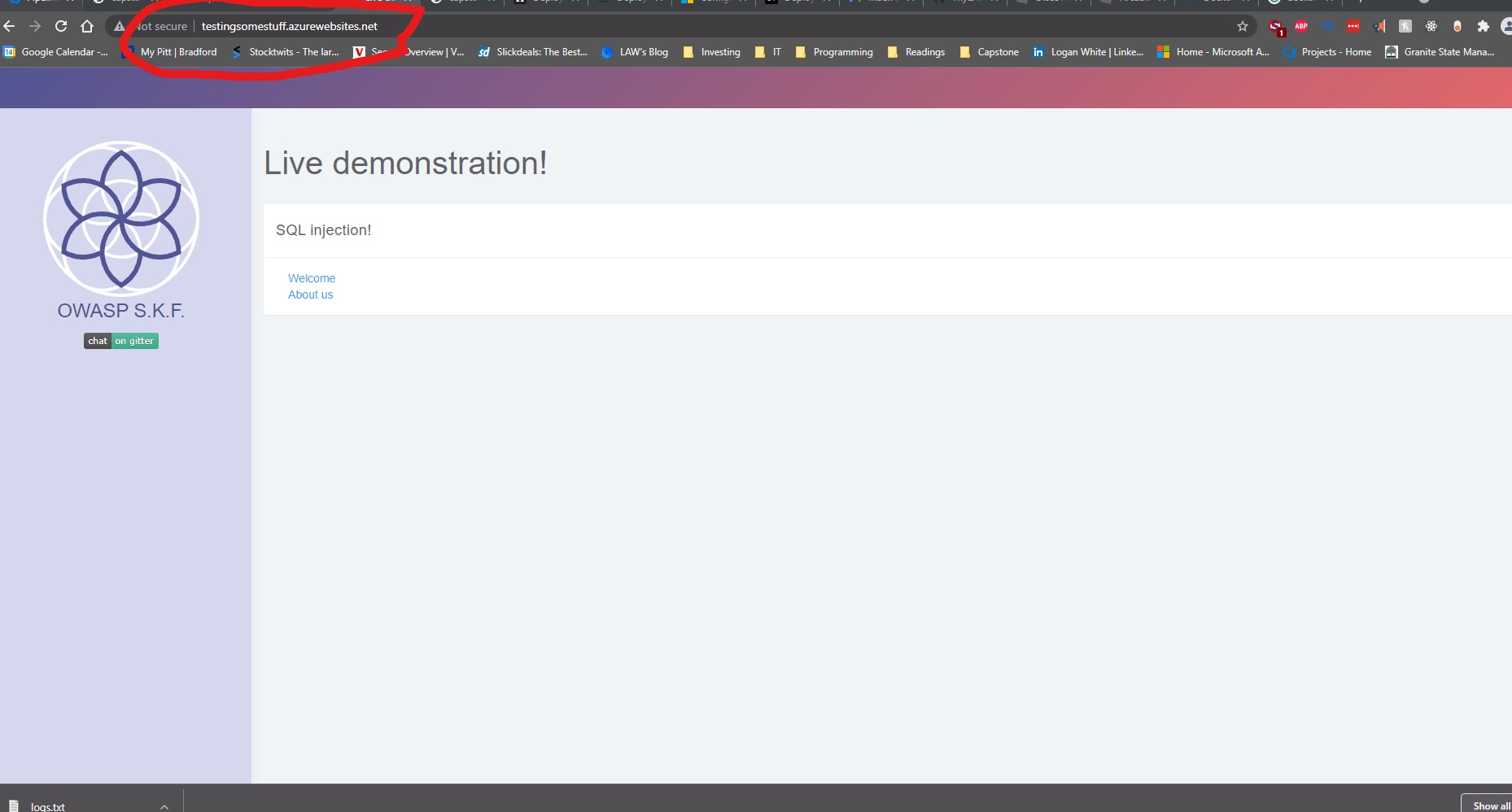

It was late, and I was tired, but I decided to create an Azure App Instance of the docker.io/blabla1337/ repository Tess linked to me. This container is hosted on Dockerhub, and Azure has an integration that lets you easily pull from it. It turns out the creators of the application host it there themselves; I did not know they had an official container repo.

I pulled the container and flipped the ports to be 5000 instead of 80, and to my absolute surprise, it worked!

With that in mind, I pulled down the image from Dockerhub to my local machine and ran it as well as my local container and compared my findings.

I did notice one difference between them: the image from Azure did not set execute permissions on the Dockerfile, but my local one did. I made sure that the Dockerfile was explicitly given at least execute permissions by modifying the permissions command like so:

RUN find . -name "*.sh" -o -name "*.py" -o -name "Dockerfile" | xargs chmod +x

I later found out that the Dockerfile only needs read permissions, but it was worth a shot. I also cleaned up my Dockerfile to pretty much match exactly what was in the Dockerfile from the official creators, which is the same as what I had started with only with a change in files. This application was just a different one from the one I was using, so don't mind the different names and extra file.
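The permission comparison I did by eye can also be scripted. The sketch below assumes the application directory of each container has already been copied out to a local folder (for example with docker cp); the function names are hypothetical, and this is not the exact procedure I followed.

```python
import os
import stat


def file_modes(root):
    """Map each file path (relative to root) to a mode string such as '-rwxr-xr-x'."""
    modes = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            relative = os.path.relpath(path, root)
            modes[relative] = stat.filemode(os.lstat(path).st_mode)
    return modes


def mode_differences(left_root, right_root):
    """Return {relative_path: (left_mode, right_mode)} for files whose modes differ.

    A file that exists on only one side shows up with None for the other side.
    """
    left, right = file_modes(left_root), file_modes(right_root)
    return {
        relative: (left.get(relative), right.get(relative))
        for relative in sorted(set(left) | set(right))
        if left.get(relative) != right.get(relative)
    }
```

Running `mode_differences` on the two copied folders lists every file whose permissions differ, which is quicker than comparing directory listings line by line.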

Despite the changes, it still did not work. After some more testing, I decided to just try out using Dockerhub with my application.

Resolution

I pushed my container to Dockerhub with the tag thylaw/capstonefinal and then once again manually brought down my container with Azure App Service, only this time it was linked to Docker Hub and not Azure Container Registry. Lo and behold, it worked.

My application works when it is hosted on Dockerhub, but not on Azure Container Registry. I can pull this container from Azure Container Registry and have it work, but it won't work when deployed to the Azure App Service like how my JuiceShop application was. I spent the next few hours trying to figure out the reason why, and I did a couple more tests like changing the appSettings in the pipeline and configuring new permissions with ACR - Identity and Roles with no luck, but I have given up for now, as I have a resolution.

I am, at least for now, converting to Dockerhub

I don't understand the wizardry behind what is happening here, and I don't think I need to keep wasting time on it. Dockerhub is perfectly usable, and I am able to implement it into my pipeline. I really want to know what the heck is going on, so I created a question on the Microsoft forums in hopes someone can tell me, but I've spent way too long on it.

With that in mind, I spent the rest of my day incorporating Dockerhub into my pipeline and making sure that it deploys successfully.

I changed my build and deploy stages in my pipeline to be like so:

    - stage: "Build"
      displayName: "Build and push"
      dependsOn: Test
      jobs:
        - job: "Build"
          displayName: "Build job"
          pool:
            vmImage: "ubuntu-20.04"
          steps:
            - task: Docker@2
              displayName: "Build and push the image to container registry"
              inputs:
                command: buildAndPush
                buildContext: $(Build.Repository.LocalPath)
                repository: thylaw/$(webRepository) # need to include thylaw now
                dockerfile: "$(Build.SourcesDirectory)/Dockerfile"
                containerRegistry: "DockerHub Registry Connection" # new registry connection
                tags: |
                  $(tag)

    - stage: "Deploy"
      displayName: "Deploy the container"
      dependsOn: Build
      jobs:
        - job: "Deploy"
          displayName: "Deploy job"
          pool:
            vmImage: "ubuntu-20.04"
          variables:
            - group: Release
          steps:
            - task: AzureWebAppContainer@1
              inputs:
                appName: $(WebAppName)
                azureSubscription: "Resource Manager Capstone Final"
                imageName: docker.io/thylaw/capstonefinal:$(build.buildId) # explicitly stated for now, will convert to variables later

With that, everything works successfully. You can see here my repository being continuously updated with each new push:

And with a final test, let's demonstrate the pipeline working by changing the index.html file.

And just like that, my application is updated (note the previous application image):

With that, I have successfully identified my issue and worked around it and I can continue on with my project!

Conclusion

Overall, a very successful week! Very happy with what I have so far and I am excited that I have my final application, though I am sure I will be making edits to it soon. This means I can completely focus on additions to my project, how exciting!

That's it for Week 7, thank you for reading, and see you next week!

Shoutouts

I want to extend my gratitude towards my friend who helped me decide to do this as a project, Aristher Manalaysay, for working with me for a couple hours to try and diagnose what was going on with the project. While we didn't come up with a resolution at the time, we were able to cross off many possibilities as to what was going wrong. Thanks Aristher!

And of course I need to also thank my friend Tess Sluijter-Stek who offered a lot of advice and assured me that my Dockerfile is correct. She also offered up the idea of exploring the official containers hosted up on Dockerhub, which I may not have even thought of, and is what led me to come to my conclusion. Thanks again Tess!

My fallen test applications that I quickly built and destroyed:

- CapstoneFinal (auto deployments)

- capstonetest2 (manual pull from acr)

- capstonetest3 (manual pull from acr)

- capstonetest4 (manual pull from dockerhub)

- capstonetestdevopsdeploydockerhub (built and pushed docker container from pipelines manual pull)

- testingsomestuff (original pull from creators dockerhub)

- multiple instances of each container

- testjuiceshop (deploy app service directly from acr)

Azure Container Registry Logo by Microsoft Corporation

SKF Logo (Edited) by Security Knowledge Framework